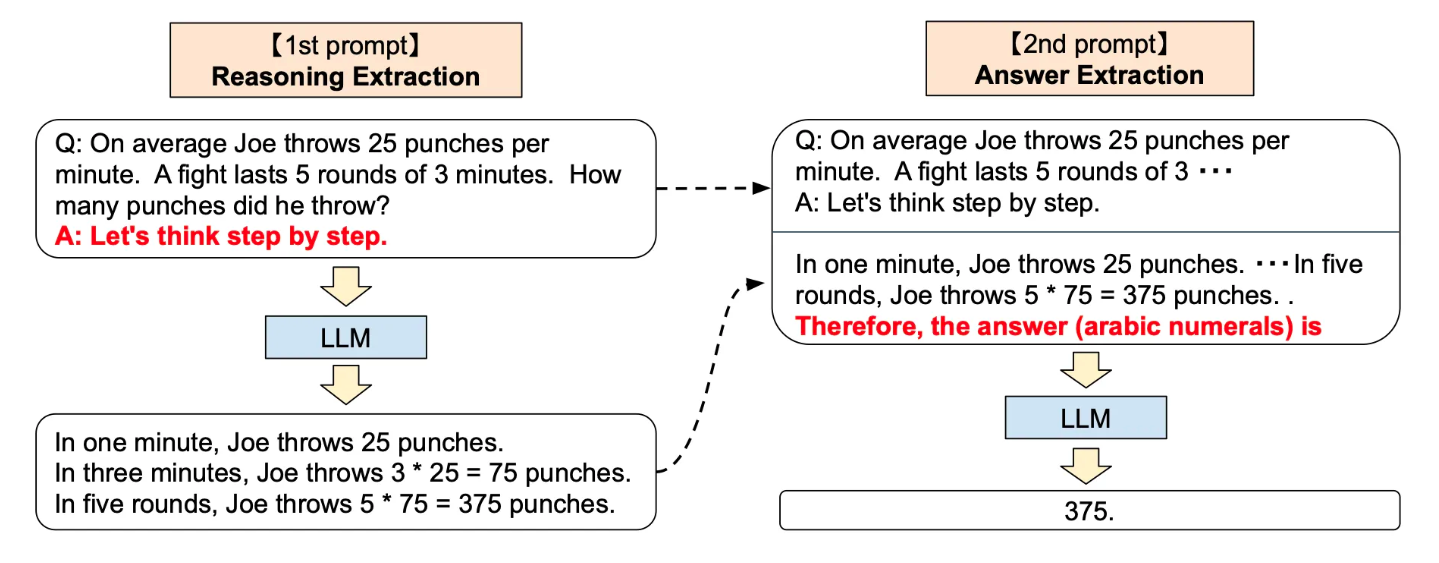

Chain-of-thought prompting can improve an LLM's reasoning and accuracy. The prompt asks the model to show its intermediate reasoning steps in the response before stating the final answer, which tends to make the answer more accurate, particularly on multi-step problems.

To do this, provide a one-shot prompt (see: Improve LLM Response using Examples and Shots) whose example answer spells out the chain of thought.

```python
# GOOD EXAMPLE: the example answer shows its chain of thought
good_question = """Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls.
Each can has 3 tennis balls. How many tennis balls does he have now?
A: Roger started with 5 balls. 2 cans of 3 tennis balls
each is 6 tennis balls. 5 + 6 = 11. The answer is 11.
Q: The cafeteria had 23 apples.
If they used 20 to make lunch and bought 6 more, how many apples do they have?
A:"""

# BAD EXAMPLE: the example answer gives only the result, with no reasoning
bad_question = """Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls.
Each can has 3 tennis balls. How many tennis balls does he have now?
A: The answer is 11.
Q: The cafeteria had 23 apples.
If they used 20 to make lunch and bought 6 more, how many apples do they have?
A:"""

# Assumes `llm` is an already-initialized model object that exposes predict()
llm.predict(good_question)
```
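Because the worked example ends with "The answer is ...", a reply that follows the same pattern can be parsed for its final value. The following is a minimal sketch of that idea, not part of the original example: the helper names `build_cot_prompt` and `extract_final_answer` are hypothetical, and `sample_completion` is a hand-written stand-in for a model reply rather than real model output.

```python
import re


def build_cot_prompt(example_question, example_reasoning, new_question):
    """Assemble a one-shot chain-of-thought prompt in the Q:/A: layout."""
    return (
        f"Q: {example_question}\n"
        f"A: {example_reasoning}\n"
        f"Q: {new_question}\n"
        "A:"
    )


def extract_final_answer(completion):
    """Return the number that follows 'The answer is', or None if absent."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


prompt = build_cot_prompt(
    "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?",
    "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
    "6 tennis balls. 5 + 6 = 11. The answer is 11.",
    "The cafeteria had 23 apples. If they used 20 to make lunch and "
    "bought 6 more, how many apples do they have?",
)

# Hand-written stand-in for a reply (assumption, not actual model output).
sample_completion = (
    "The cafeteria had 23 apples. They used 20, leaving 23 - 20 = 3. "
    "They bought 6 more, so 3 + 6 = 9. The answer is 9."
)

final_answer = extract_final_answer(sample_completion)
print(final_answer)
```

In practice, `sample_completion` would be replaced by whatever the model returns for `prompt`. A reply that skips the "The answer is" phrasing makes `extract_final_answer` return `None`, so the caller should handle that case.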